I spent $1 billion and all I got was a Rubik's cube

Art: David Hinnebusch

Last week, I saw this tweet come across my timeline:

This is an unprecedented level of dexterity for a robot, and is hard even for humans to do.

The system trains in an imperfect simulation and quickly adapts to reality: openai.com/blog/solving-r…

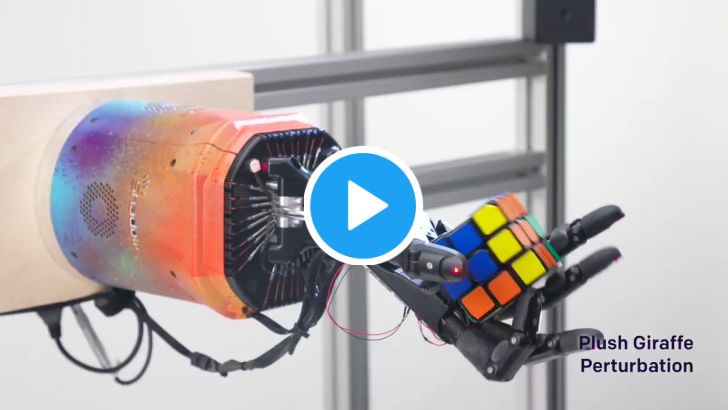

Robots manipulating Rubik’s cubes? Neural networks? The hand is called Dactyl? Sweet. I took a two-second glance and retweeted the follow-up tweet, which includes a fun visual prop.

A couple days later, there was the following, in response to the press release:

Please zoom in to read & judge for yourself.

This kicked off a huge controversy over the announcement: what OpenAI actually claimed versus what the general public might think it was claiming.

The original Rubik’s cube post says,

Since May 2017, we’ve been trying to train a human-like robotic hand to solve the Rubik’s Cube. We set this goal because we believe that successfully training such a robotic hand to do complex manipulation tasks lays the foundation for general-purpose robots. Solving a Rubik’s Cube one-handed is a challenging task even for humans, and it takes children several years to gain the dexterity required to master it. Our robot still hasn’t perfected its technique though, as it solves the Rubik’s Cube 60% of the time (and only 20% of the time for a maximally difficult scramble).

But, as a very good Next Web summary of the controversy explains,

Based on this title, you’d be forgiven if you thought the research discussed in said article was about solving Rubik’s Cube with a robot hand. It is not.

Don’t get me wrong, OpenAI created a software and machine learning pipeline by which a robot hand can physically manipulate a Rubik’s Cube from an ‘unsolved’ state to a solved one. But the truly impressive bit here is that a robot hand can hold an object and move it around (to accomplish a goal) without dropping it.

There were tons of online discussions and Reddit threads about what happened. I wanted to know the truth between these two accounts too, so I started investigating to figure out for myself whether the cube was hype or not. But I got sidetracked when I started reading up on OpenAI.

I’d heard of OpenAI before, but I’d never looked into it too deeply. And, as is usually the case, I went down a very weird and tech-y rabbit hole.

The non-profit was founded by Elon Musk (yes, of the Russian meme fame), Infosys (an IT consulting and outsourcing firm), Y Combinator’s Sam Altman (who worked for Paul Graham), former head of LinkedIn Reid Hoffman, Peter Thiel (no Normcore post yet, but it’s only a matter of time), and Amazon Web Services. It’s headed by Ilya Sutskever, who worked with AI luminaries Geoffrey Hinton and Andrew Ng before a stint at Google.

The idea came out of Musk’s personal conviction that robots are going to kill us all. More precisely, “a fleet of artificial intelligence-enhanced robots capable of destroying mankind,” according to his biography. (I don’t blame him for being worried about this, to be honest.)

He and Altman joined forces to create OpenAI. In an interview at the time of the launch, in 2015, Musk said,

As you know, I’ve had some concerns about AI for some time. And I’ve had many conversations with Sam and with Reid [Hoffman], Peter Thiel, and others. And we were just thinking, “Is there some way to insure, or increase, the probability that AI would develop in a beneficial way?” And as a result of a number of conversations, we came to the conclusion that having a 501c3, a non-profit, with no obligation to maximize profitability, would probably be a good thing to do. And also we’re going to be very focused on safety.

Was this a real fear? Probably not, according to a lot of people, like Berkeley professor Ben Recht:

"Anyone who believed that Elon Musk and Sam Altman were forming something for the good of mankind with a non-profit were fooling themselves," says Recht. "It's this weird Silicon Valley vanity project."

Regardless, things started out really well. OpenAI hired high-profile people like Sutskever, and Greg Brockman, the former CTO of Stripe, to work on a wide variety of problems. Within the first year, they testified to Congress on AI and released OpenAI Gym, a platform for developing reinforcement learning algorithms. Reinforcement learning is a prominent tool used in training robots and other types of artificial intelligence.

Then, things started to get squirrely. By 2018, OpenAI was still producing research, like the GPT-2 language models that could generate natural language more accurately, but Musk left the project. He claims he left to avoid a conflict of interest between OpenAI and Tesla,

“As Tesla continues to become more focused on AI, this will eliminate a potential future conflict for Elon,” says the post. Musk will stay on as a donator to OpenAI and will continue to advise the group.

At the same time,

The blog post also announced a number of new donors, including video game developer Gabe Newell, Skype founder Jaan Tallinn, and the former US and Canadian Olympians Ashton Eaton and Brianne Theisen-Eaton. OpenAI said it was broadening its base of funders in order to ramp up investments in “our people and the compute resources necessary to make consequential breakthroughs in artificial intelligence.”

What happened was that OpenAI, despite having a billion dollars, was having a hard time competing with companies that owned clouds, like Google.

The non-profit was at a distinct disadvantage compared to the corporate A.I. labs, many of which are part of companies with massive cloud computing businesses and therefore don't have to pay market rates for server time.

Things got really interesting at this juncture:

To compete with big corporations and startups with huge reserves of venture capital funding, OpenAI's board made a fateful decision: if it couldn't beat the corporates, it would join them. In March, Altman announced that OpenAI would create a separate for-profit company, called OpenAI LP, that would seek outside capital.

The organization published a blog post on Monday announcing OpenAI LP, a new entity that it’s calling a “capped-profit” company. The company will still focus on developing new technology instead of selling products, according to the blog post, but it wants to make more money while doing so — muddying the future of a group that Musk founded to create AI that would be “beneficial to humanity.”

…

With the capped-profit structure that it created, investors can earn up to 100 times their investment but no more than that. The rest of the money that the company generates will go straight to its ongoing nonprofit work, which will continue as an organization called OpenAI Nonprofit.
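To make the cap concrete, here is a minimal Python sketch of the 100x arithmetic described above. The function name `split_return` and the dollar figures are hypothetical, chosen only for illustration, and the sketch assumes the simplest possible reading of the cap; the actual terms of OpenAI LP's agreements are not given here and are likely more complicated.

```python
def split_return(invested, gross_return, cap_multiple=100):
    """Split a gross return between an investor and the nonprofit.

    Assumes the simplest reading of the cap: the investor keeps
    everything up to cap_multiple * invested, and any excess goes to
    the nonprofit. Hypothetical illustration, not OpenAI LP's real terms.
    """
    cap = invested * cap_multiple
    to_investor = min(gross_return, cap)
    to_nonprofit = max(gross_return - cap, 0)
    return to_investor, to_nonprofit


# A hypothetical $10 million stake that somehow returns $1.5 billion:
investor, nonprofit = split_return(10_000_000, 1_500_000_000)
print(investor, nonprofit)
```

Under this reading, a stake has to return more than 100 times its cost before the nonprofit sees a dollar from it.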

Finally, topping all of the interesting news, in the summer of 2019, Microsoft invested $1 billion into OpenAI, effectively turning the non-profit into an arm of Microsoft.

What’s going to happen? For starters, OpenAI will be spending a lot on Microsoft cloud services, as Brockman said on Hacker News:

(I’m guessing that, since AWS was an original investor, they were using AWS initially and now will have to migrate? Apparently the future of humanity looks a lot like companies throwing millions of dollars at Linux servers in the cloud, in which case, I’ve been living in the future for most of my career.)

All of this starts to make a whole lot of sense when you see where the cloud providers are going: they’re falling all over themselves to make it look like they’re doing ethical AI.

Why? So they don’t catch the attention of regulators itchy to make an example of companies doing Bad Things, and also to soothe the whims of a public that is finally becoming wary of Big Tech.

Google learned its lesson here last year with Project Maven, under which it was slated to analyze US Department of Defense drone footage; the project ended up not going through after documents about it were leaked to the Intercept. As a result, the company tried to create an Ethics in AI board, which was cancelled after just a week when some of its members were found to belong to conservative think tanks.

Microsoft has been doing better on this front. They have a splashy department called AI for Good, which, based on its homepage, does things like help whales(?). They’ve done the legwork.

They’ve halted political contributions (under pressure, but still):

Hell, they’ve even met with the Pope to discuss implications of AI. (This should probably be a standard Normcore disclaimer, but, as Dave Barry says, I am not making any of this up.)

So in this spirit, OpenAI is the perfect fit for MSFT. As a cloud provider, they’re arguably in second place behind AWS, and the more examples of groundbreaking AI they can provide as case studies on their platform, the more startups (who strongly prefer AWS or GCP) will want to move there.

This business will prove to be super lucrative for them, because training models for AI is EXPENSIVE - in money, time, and, potentially, in environmental costs.

For example, for the Rubik’s cube, it took about 10,000 years of simulated training to get to a model that works 60% of the time in an extremely controlled environment, with an extremely specific use case.

Imagine how much money Azure can rake in if everyone’s training robots, and if they know that Azure is the go-to platform for this.

And, all of this is good for Microsoft for another, very strategic reason: it provides good PR air cover for all of the other stuff the company is doing.

If you skim the tech press, you’ll find plenty of stories hating on companies like Google, Facebook, Uber, and Apple. On the Microsoft front, however, every single story is about how amazing the turnaround has been, or about Satya.

Among the five most valuable tech companies, Microsoft is the only one to avoid sustained public criticism about contributing to social ills in the last couple of years. At the same time, Satya Nadella, its chief executive, and Brad Smith, its president, have emerged as some of the most outspoken advocates in the industry for protecting user privacy and establishing ethical guidelines for new technology like artificial intelligence.

Or the stories have been speculation about Microsoft’s recent acquisitions, such as GitHub and LinkedIn. (Speaking of LinkedIn, remember how Reid Hoffman, the former CEO, was involved in OpenAI? He’s now a board member at Microsoft.)

With regard to their investment in OpenAI, no one’s said, “Oh great, Microsoft is now going to weaponize drones.”

But why not? They’re doing plenty of borderline questionable stuff. For example, just recently, an investigation came out about Microsoft’s collaboration with the Israeli government in doing AI facial and body surveillance:

And, of course, the biggest news is that Microsoft recently won an enormous US government contract named (groan) JEDI,

The US government has awarded a giant $10 billion cloud contract to Microsoft, the Department of Defense has confirmed. Known as Joint Enterprise Defense Infrastructure (JEDI), the contract will provide the Pentagon with cloud services for basic storage and power all the way up to artificial intelligence processing, machine learning, and the ability to process mission-critical workloads.

With a billion-dollar investment, I find it hard to see how OpenAI can avoid getting pulled into whatever Microsoft wants it to do. Right now, it’s doing research that its researchers want, ostensibly. But when you expect returns, you can get companies to do all sorts of things. For example, work on AI for the Department of Defense. And maybe, if you’re OpenAI, you feel the pressure to go faster and release more results. So maybe OpenAI will find itself having to release more and more attention-grabbing headlines, like this Rubik’s cube piece, in order for Microsoft to be appeased.

Ultimately, it’s extremely ironic that an organization that was meant to be value-neutral and to investigate the potential benefits and harms of AI for humanity might end up filling the role that Google left behind in Project Maven and, say, pick up building military robots. Like this one:

Or it might not. But Normcore is skeptical, and reminds you to also be skeptical. Don’t watch the Rubik’s cube. Watch closely the hand manipulating it. And I’m not talking about the robot hand, Dactyl.

Art: Robot with C10 Grill Tie, David Michael Hinnebusch, 2017

What I’m reading lately

New logo for Python’s Pandas (what’s a better way to trigger perfectionist data people than to tell them to discuss a new logo?)

I’m really enjoying Andrew’s newsletter, which I like to think of as “Normcore ways to save money.”

My fair city of Philadelphia was one of the earliest examples of city planning in the US

This post from a while ago about a man who had never used a computer

Great discussion:

Looking for some big wins that I've somehow missed.

About the Author and Newsletter

I’m a data scientist in Philadelphia. Most of my free time is spent wrangling a preschooler and an infant, reading, and writing bad tweets. I also have longer opinions on things. Find out more here or follow me on Twitter.

This newsletter is about issues in tech that I’m not seeing covered in the media or blogs and want to read about. It goes out once a week to free subscribers, and once more to paid subscribers. If you like this newsletter, forward it to friends!