The rise of the non-expert expert

Art: Profit I, Jean-Michel Basquiat, 1982

I’ve been thinking a lot recently about what it was like to write code 20, 30 years ago.

At the time, one of my favorite tech writers, Ellen Ullman, was working as a developer and project manager and wrote an amazing book, “Close to the Machine”, about the art of software development, life, philosophy, and everything in between.

In one of the chapters, she tells the story of a meeting she had with a vice-president of a big bank, in charge of re-engineering global transaction processing payments.

The vast network of banks, automated tellers, clearinghouses, computers, phone lines - all of which go into sending a single credit card transaction around the globe - was her domain.

“If it all breaks down,” she said, “the banks can’t balance their accounts.”

…

A wave of nausea washed over me: I imagined what it would feel like to leave a bug lying around and wind up being responsible for shutting down banks around the world.

…

[Ullman mentions that the VP said only three developers worked on the entire system.]

The vice president laughed. “We’re lucky to have them. The system is written in assembler.”

“It’s in assembler?” I felt a true, physical sickness. “Assembler?” Low-level code. One step above machine language. Hard to write, harder to change. Over time, the comments begin to outnumber the programming statements, but it does no good. No one can read it anyway.

When the vice president saw my sympathies, she relaxed. Now she wanted to talk to me about all the groovy new technologies being tried out by a special programming group. Multitiered client-server, object-oriented systems, consumer networks - all the cool stuff that was on the opposite side of the universe from her fifteen-year-old assembler code.

What was it like to work all those years ago, to write code? I don’t know.

But Ullman and Neal Stephenson do.

In the beginning, Stephenson writes, there was a command line. He explains that computers, at their core, move information around.

The smallest unit of information is a bit, a single 1 or 0. Bits are grouped into bytes, and a byte is just a string of eight 1s or 0s. Here’s what one looks like:

01011100

When you have lots of bytes, you have binary data. For example, here’s Normcore Tech in binary, with each character encoded as one ASCII byte:

01001110 01101111 01110010 01101101 01100011 01101111 01110010 01100101 00100000 01010100 01100101 01100011 01101000

It is this binary that the machine reads, that makes up all of our software, and that moves our information through the internet.
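To make those byte strings a little more concrete, here is a minimal Python sketch that produces the same output. It encodes text as ASCII and prints each byte as eight binary digits. The function name `to_bits` is made up for illustration. The last lines go the other way and decode the single byte shown earlier.

```python
def to_bits(text: str) -> str:
    """Return each byte of an ASCII string as eight binary digits, space-separated."""
    # encode("ascii") maps each character to one byte (an int from 0 to 255);
    # format(b, "08b") writes that int in binary, zero-padded to eight digits.
    return " ".join(format(b, "08b") for b in text.encode("ascii"))


print(to_bits("Normcore Tech"))
# 01001110 01101111 01110010 01101101 01100011 01101111 01110010 01100101 00100000 01010100 01100101 01100011 01101000

# Going the other way: the single byte 01011100 is the number 92,
# which ASCII maps to the backslash character.
value = int("01011100", 2)
print(value, chr(value))  # 92 \
```

The space between "Normcore" and "Tech" is a byte too: 00100000, or 32.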

How it started

We write computer programs for a number of reasons, but ultimately all of them convert higher-level information that humans can understand (text, tweets, video) into bytes, move those bytes around, and then convert them back until they are human-legible.
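Here is a rough sketch of that round trip in Python. It takes a made-up tweet (the field names and text are assumptions for illustration only) and turns it into bytes using JSON and UTF-8. The bytes then pass through an in-memory stream, which stands in for a file or network connection. Finally, the bytes are turned back into readable text. Real systems layer much more on top, but the shape is the same.

```python
import io
import json

# Human-level information. The fields and text are invented for this example.
tweet = {"user": "example_user", "text": "Hello from 1999"}

# 1. Turn the structure into a linear string of bytes: JSON text, encoded as UTF-8.
payload = json.dumps(tweet).encode("utf-8")

# 2. "Move it around." An in-memory byte stream stands in for a file or socket.
channel = io.BytesIO()
channel.write(payload)
channel.seek(0)  # rewind so the receiver reads from the start
received = channel.read()

# 3. Turn the bytes back into something a human can read.
decoded = json.loads(received.decode("utf-8"))

print(payload[:12])      # b'{"user": "ex'
print(decoded["text"])   # Hello from 1999
print(decoded == tweet)  # True
```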

People used to have to do this kind of conversion very manually. As Stephenson tells it:

We had a human/computer interface a hundred years before we had computers. When computers came into being around the time of the Second World War, humans, quite naturally, communicated with them by simply grafting them on to the already-existing technologies for translating letters into bits and vice versa: teletypes and punch card machines.

These embodied two fundamentally different approaches to computing. When you were using cards, you'd punch a whole stack of them and run them through the reader all at once, which was called batch processing.

Now (or rather, in 1999, the vantage point from which Stephenson writes),

[T]he first job that any coder needs to do when writing a new piece of software is to figure out how to take the information that is being worked with (in a graphics program, an image; in a spreadsheet, a grid of numbers) and turn it into a linear string of bytes. These strings of bytes are commonly called files or (somewhat more hiply) streams. They are to telegrams what modern humans are to Cro-Magnon man, which is to say the same thing under a different name. All that you see on your computer screen--your Tomb Raider, your digitized voice mail messages, faxes, and word processing documents written in thirty-seven different typefaces--is still, from the computer's point of view, just like telegrams, except much longer, and demanding of more arithmetic.
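Stephenson’s spreadsheet example can be sketched in a few lines of Python with the standard `struct` module. The byte layout is an assumption made up for this illustration, not how any real spreadsheet format stores data. It starts with a small header holding the grid’s shape, followed by every cell as a little-endian 64-bit float, row by row. The helper names `grid_to_bytes` and `bytes_to_grid` are also hypothetical.

```python
import struct

# A tiny "spreadsheet": a grid of numbers, 2 rows by 3 columns.
grid = [
    [1.5, 2.0, 3.25],
    [4.0, 5.5, 6.75],
]


def grid_to_bytes(rows: list[list[float]]) -> bytes:
    """Flatten a rectangular grid into one linear byte string (illustrative layout)."""
    n_rows, n_cols = len(rows), len(rows[0])
    # Header: two little-endian unsigned 32-bit ints recording the shape (8 bytes).
    header = struct.pack("<II", n_rows, n_cols)
    # Body: every cell as a little-endian 64-bit float, row by row.
    cells = [value for row in rows for value in row]
    body = struct.pack(f"<{len(cells)}d", *cells)
    return header + body


def bytes_to_grid(data: bytes) -> list[list[float]]:
    """Rebuild the grid by reading the shape first, then the cells after the header."""
    n_rows, n_cols = struct.unpack_from("<II", data, 0)
    cells = struct.unpack_from(f"<{n_rows * n_cols}d", data, 8)
    return [list(cells[r * n_cols:(r + 1) * n_cols]) for r in range(n_rows)]


stream = grid_to_bytes(grid)
print(len(stream))                    # 56: an 8-byte header + 6 cells * 8 bytes
print(bytes_to_grid(stream) == grid)  # True
```

The header matters. A flat run of six numbers could be a 2×3 grid or a 3×2 one, so the shape has to travel with the bytes for the reader to rebuild the grid.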

The early years of programming were mainly focused on moving away from this very physical infrastructure of writing bits of logic on punch cards and running them through enormous machines. Among the first programming languages was assembly, the language that horrified Ullman so much. Assembly is very close to machine code: each instruction corresponds more or less directly to an operation the processor executes.

As the machines became smaller and more accessible, more programming languages came about. By the late 1980s or early 1990s, when Ullman was well into her career, your average programmer might know COBOL, SQL, Fortran, and maybe the relative newcomer, C, in addition to assembler.

In a lot of ways, the world was much smaller and less complex. Many teams didn’t even use version control! But even though there were fewer languages, information was harder to get to. There was no Stack Overflow. There were reference books, man pages, and online forums. In the early days, programming, and finding information about programming, was not easy.

I never went to user groups for programming help myself, but they were around. They’d meet monthly, and there were certainly people there who knew programming. If you owned a computer back then, there was an expectation that you knew at least a little about programming, even if it was just writing batch files in DOS. You couldn’t do much with a computer without some technical knowledge of how to get it to do what you wanted, because the idea of an easy-to-use GUI was still new.

In the early days, it was easier to remember something than to look it up. Software also took much longer to propagate, often on floppy disks that took a long time to release. For example, Windows 3.1 came on 6 floppies that had to be inserted in order, like a highly arcane magical incantation, for it all to work. So deep knowledge of any one of these languages and skill areas was highly valued.

Most programming happened at the command line, much as Ullman and Stephenson describe: programming alone, in the dark, with only the brief flashes of light from user groups to help, using archaic languages or new languages with puzzling features.

The meteoric rise of the internet created an opportunity for different kinds of languages to evolve: JavaScript as a platform for building the internet, Java for mainstream web services, and Python as a multipurpose web and data language.

The internet also meant that information about those languages could be shared more quickly. Languages gained followers and spread across companies through blog posts and talks, which could now be streamed online thanks to services like YouTube that took advantage of the increase in available bandwidth.

As it became easier to write the internet, it also became easier to collect the exhaust trails of the people reading it, and to harvest the data that the internet’s backends spewed forth into data lakes. The growth of connected machines has brought an exponential proliferation of tools. There are cloud environments with thousands of services, different orchestration infrastructures, agile, kanban, scrumban (yes), and millions of developer conferences for every possible topic under the sun.

Before, you were pretty limited in both your hardware and software environments. These days, you have your pick of 3-4 IDEs per language, different environments for moving that code to the cloud, integrating it with your other code, multiple applications, hundreds and hundreds of different ways to do the same thing.

How it’s going

We’re now in an interesting situation that’s really well-illustrated by the multifaceted responses to this tweet.

In the replies, dozens of people who are pretty senior say that there is a lot they don’t know in their day jobs, and there is a lot they’re trying to figure out.

Threads like this are often meant to empower people earlier in their careers. But this one also made me think about something I’ve been turning over for some time now. Because we have so many different software products, services, languages, and steps to production for any given piece of software, there is no such thing as a senior developer anymore.

Or rather, there is no such thing as a senior developer who has both the depth required in earlier development environments and the breadth required by the modern software stack. We’ve gone from mailing floppies to a wide range of areas across the shipping side of the development cycle: front-end, back-end, integration testing, the cloud, IoT, ops (monitoring), and a million other areas that I’m probably forgetting.

Look at the number of tools available for ONE area of the software development lifecycle alone. This isn’t even for writing code. It’s for devops, the meta-control of code, where you monitor how it runs.

In the earlier days, developers could afford a lot of depth, because there was less to know. You could sit and think about some particularly thorny C code for a while, because you didn’t also need to be learning React, Kubernetes, TensorFlow, GitHub, GitLab, and whatever the latest announcements from AWS re:Invent are.

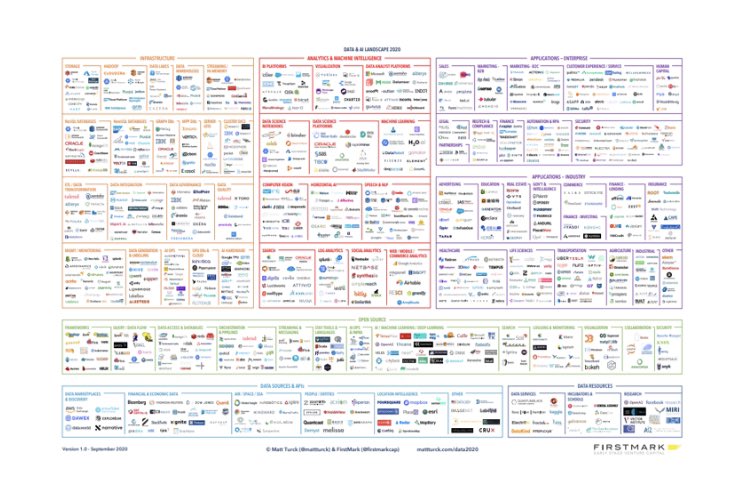

Take, for another example, the machine learning landscape these days. I did a joke-tweet riff on Matt Turk’s yearly posting of the current landscape for ML-related tooling, but, as with every joke, there is a grain of truth:

A person coming into a data position cannot be expected to know even a fraction of all these tools, each one of them written in its own language and with its own nuances.

Chip recently tweeted that these are the areas she would focus on if she were starting to learn MLE again:

I agree with many of them, but it’s impossible for every machine learning engineer to know all of these areas, be strong in all of them, or even need all of them at work.

For example, I’ve never (yet) used Dask or Kubernetes, I’ve only grazed data structures, and I use ML algorithms much less in my work than I’d like to. But out of that list, version control, unit/integration tests, SQL, Python, and REST APIs make up a great deal of my day-to-day landscape.

I cannot imagine how overwhelming this list looks to a person starting in industry. It doesn’t even get into the subtopics of each category. For example, under Machine Learning Algorithms, you could potentially be looking at upwards of 20 or 30 algorithms. I’ve used maybe 4 or 5 in my entire career, repeatedly.

I’d venture to say that most technical people fall into this realm: knowing a core handful of 7-8 things pretty well. For everything else, there’s Google and O’Reilly.

So what?

What used to distinguish senior people from junior people was the depth of knowledge they had about any given programming language and operating system, and the amount of time

What distinguishes them now is breadth and, I think, the ability to discern patterns and carry them across multiple parts of a stack, multiple stacks, and multiple jobs working in multiple industries. We are all junior, now, in some part of the software stack. The real trick is knowing which part that is.

Of course, this is my bias as a former consultant, but I truly think that the role of a senior developer now is that of an internal advisor. They draw from a mental card catalog of previous incidents and products to offer advice and speculation about how the next one might go. If they don’t know, they know what kinds of questions to ask to find out.

If you’ve worked with Kafka before, you’re not going to have any problems with Kinesis, and you’ll find Flink to be similar enough to get going. If you’ve used Ruby, Python’s not going to be a problem. If you’ve used cron and YAML, you’ll enjoy GitHub Actions. If you’ve used Spark, starting with Dask probably won’t be an issue.
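One reason Kafka experience carries over to Kinesis is that both are built around the same core idea: a partitioned, append-only log. Kafka calls the pieces partitions and tracks positions with offsets. Kinesis calls them shards and uses sequence numbers. The toy Python class below is a hypothetical, in-memory sketch of that shared idea, not either system’s API; the class `ToyLog` and its methods are invented for illustration. It shows three properties of that design:

- Records with the same key go to the same partition.
- Order is preserved within a partition.
- Each reader keeps track of its own position.

```python
import zlib


class ToyLog:
    """Hypothetical in-memory partitioned log; a teaching toy, not a real client API."""

    def __init__(self, num_partitions: int) -> None:
        # Each partition is an ordered, append-only list of (key, value) records.
        self.partitions: list[list[tuple[str, str]]] = [[] for _ in range(num_partitions)]

    def partition_for(self, key: str) -> int:
        # Stable hash, so the same key always lands in the same partition.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def append(self, key: str, value: str) -> tuple[int, int]:
        """Append a record and return the (partition, offset) where it was stored."""
        p = self.partition_for(key)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1

    def read(self, partition: int, offset: int, max_records: int = 10) -> list[tuple[str, str]]:
        """Read up to max_records starting at offset; the caller tracks its own position."""
        return self.partitions[partition][offset:offset + max_records]


log = ToyLog(num_partitions=3)
for i in range(3):
    log.append("user-42", f"click {i}")

p = log.partition_for("user-42")
position = 0                      # a consumer's saved position in partition p
batch = log.read(p, position, max_records=2)
position += len(batch)            # remember where we stopped
print(batch)                      # [('user-42', 'click 0'), ('user-42', 'click 1')]
print(log.read(p, position))      # [('user-42', 'click 2')]
```

Python’s built-in `hash()` is randomized per process for strings. That is why the sketch uses `zlib.crc32` as a stand-in: it gives a partition assignment that stays stable across runs. Real systems use their own hashing schemes.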

In other words, what I think is most important for senior people to have these days is a solid grasp of a couple of languages and the fundamentals that transcend those languages.

Of course, it’s always been the case that software engineering has been as much about puzzling out the logic as writing the actual code. But <movie announcer voice> in a world where we have five million and ten data processing frameworks, </voice> this distinction and ability to reason through why something might work or not becomes even more important.

In other words, to break down the systems and platforms to their core principles, to their ones and their zeros, and be able to reapply those principles elsewhere, is the new knowing the command line. (Oh, but you should also know the command line, too.)

What I’m reading lately:

I wrote about how I taught Python to beginners a few weeks ago

I just finished a biography of Golda Meir; strong recommend.

I am also reading The Blue Castle, for an escape, and it’s lovely.

I also recommend Ted Lasso! It’s a gem of a TV show.

Aphyr has a series of posts on the technical interview. I understand almost none of them, but I aspire to this level of technical writing.

The Newsletter:

This newsletter’s M.O. is takes on tech news that are rooted in humanism, nuance, context, rationality, and a little fun. It goes out once or twice a week. If you like it, forward it to friends and tell them to subscribe!

The Author:

I’m a machine learning engineer. Most of my free time is spent wrangling a kindergartner and a toddler, reading, and writing bad tweets. Find out more here or follow me on Twitter.